The development of AI-powered assistive technologies helps improve accessibility and inclusivity for individuals with disabilities. Advancements in AI, with roots dating back to the 1950s, have changed aspects of disabled individuals’ daily lives by creating tailored solutions that address unique needs and abilities. Some of these solutions are software; others are physical devices. Many, such as subtitles and speech recognition software, are also used by people without disabilities in their day-to-day lives. All of them can help make technology more accessible.

Assistive Technologies Powered by AI

Screen Readers

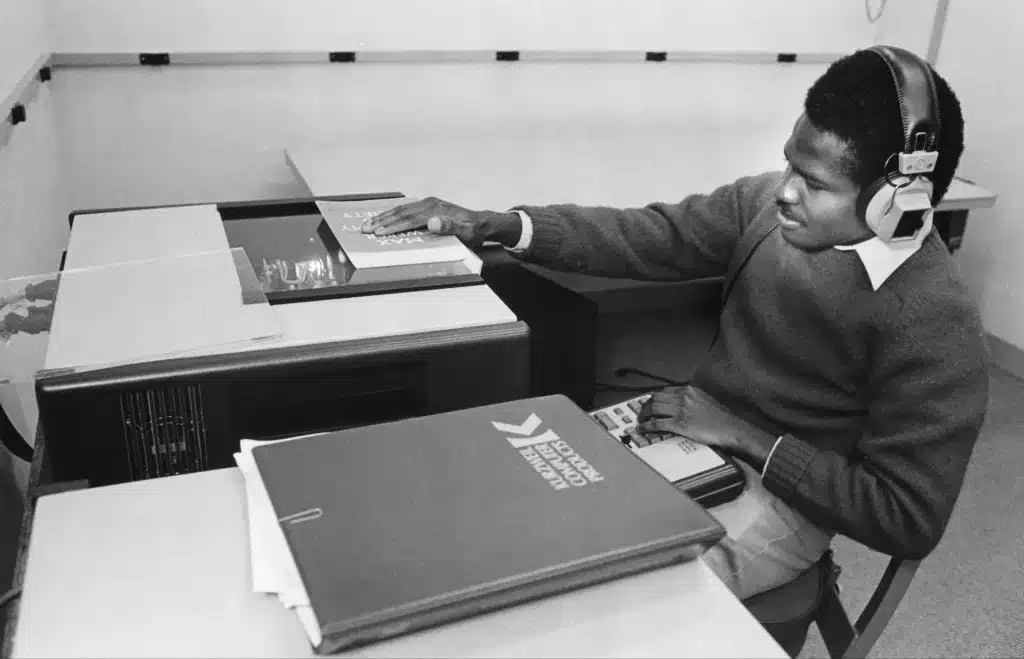

Screen readers are assistive technology tools that enable individuals with visual disabilities to access and navigate digital content. They have evolved over the last 50 years, starting in the 1970s. In 1976, Ray Kurzweil introduced the Kurzweil Reading Machine, an early commercial forerunner of the screen reader that focused on text-to-speech conversion of printed documents.

Along with Kurzweil, Dr. Jim Thatcher played a pivotal role in developing the first screen readers designed for digital interfaces. His prototype, PC-SAID (Personal Computer – Synthetic Audio Interface Driver), became the IBM Screen Reader during the early 1980s. The product was so widely recognized as a pioneer in assistive technology for digital content that “screen reader” became the common term for the entire class of tools that make digital content accessible to individuals with visual disabilities.

Enhancing the accuracy of screen readers through AI

Over the years, screen readers have undergone significant advancements in functionality and integration with operating systems and digital platforms. Contemporary screen readers such as JAWS (Job Access With Speech), NVDA (NonVisual Desktop Access), and VoiceOver (built into Mac computers; press Command-F5 to turn it on) have become widely used and continue to evolve with the complexity of digital content and technologies.

AI algorithms have significantly improved the accuracy of screen readers, which assist blind and low-vision individuals in navigating website content. With AI, screen readers can better identify webpage elements, create precise text-to-speech conversions, and offer more accurate descriptions of what the user cannot see on screen. This advancement simplifies users’ interactions and allows individuals with visual disabilities to access the information they need.

Speech Recognition Software

Speech recognition software gives individuals with disabilities an alternative method of communication and control. It enables those with mobility disabilities to interact with computers and devices by voice, overcoming the challenges posed by input devices such as keyboards or mice.

Speech recognition software is widely used for voice-based interaction in smart speakers, mobile phones, and virtual assistants such as Amazon’s Alexa and Apple’s Siri. Automotive systems incorporate the technology for voice commands, while dictation software converts spoken words to text. It benefits disabled individuals, who can perform tasks by voice, and people without disabilities, who gain hands-free control across various applications.

For individuals with disabilities, speech recognition software provides:

- Hands-Free Control: It allows individuals with mobility disabilities to operate devices and navigate digital content using voice commands, eliminating the need for manual input.

- Communication through Technology: Speech recognition software assists those with speech or language disabilities by converting spoken words into written text in real-time, facilitating effective communication in various settings.

- Audio Feedback: It enhances accessibility for blind and low-vision users by providing audio feedback, enabling them to interact with digital content and perform tasks without relying solely on visual cues.

Brief History of Speech Recognition Software

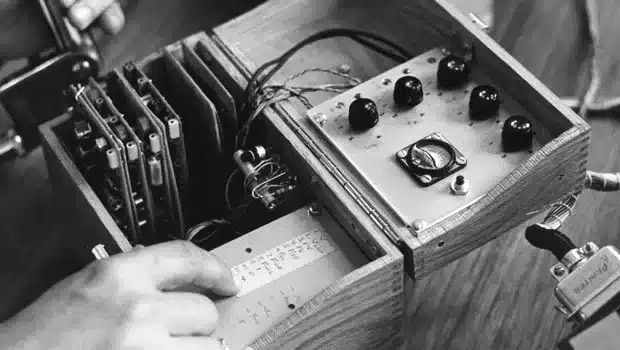

The development of speech recognition technology began in the 1950s, but it wasn’t until the 1960s that the first practical speech recognition systems started to emerge. IBM released their “Shoebox” device in 1961, which could recognize and understand a limited set of spoken digits. However, these early systems had limited accuracy and were computationally expensive.

William Dersch’s Shoebox listened as the operator spoke numbers and commands such as “Five plus three plus eight plus six plus four minus nine, total,” and would print out the correct answer: 17.

Throughout the 1990s and early 2000s, speech recognition software continued to advance, with companies like Dragon Systems (whose technology later became part of Nuance Communications) playing a significant role in commercializing it. Speech recognition software became more affordable, more accessible, and capable of handling larger vocabularies.

Today, AI-powered speech recognition systems can handle complex and nuanced speech patterns, accents, and variations in intonation. The training data used for AI models enables continuous learning and refinement, allowing the software to adapt to individual users’ voices and speech patterns over time. Additionally, AI algorithms can analyze context and semantics, which improves accuracy and reduces transcription errors.

Some examples of Speech Recognition Software include:

- Dragon NaturallySpeaking: Developed by Nuance Communications, Dragon NaturallySpeaking is a widely used speech recognition software for Windows computers. It offers accurate transcription, voice commands, and hands-free control of your computer.

- Windows Speech Recognition: Integrated into Windows operating systems, Windows Speech Recognition enables users to control their computers using voice commands.

- Apple Dictation: Built into Apple’s macOS and iOS devices, Apple Dictation provides speech recognition capabilities for text input. It allows users to dictate text in various applications, including messaging, word processing, and email.

- Google Docs Voice Typing: Google Docs offers a voice typing feature that allows users to dictate their text directly into a Google Docs document. It is accessible through the Google Docs web interface and works on different platforms.

Vision Enhancement

AI-based vision enhancement systems make visual information more accessible to individuals with visual disabilities through computer vision and image recognition algorithms. These technologies assist with object and text recognition, navigation, and identification. Deep learning algorithms and neural networks revolutionized computer vision in the 2010s, allowing systems to automatically extract meaningful features from visual data and improving accuracy and performance.

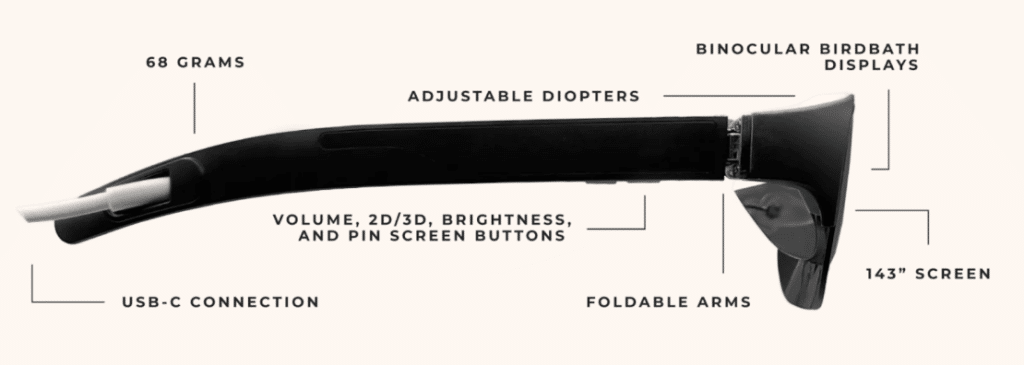

AI algorithms process visual information from cameras or wearable devices, including smart glasses such as NuEyes or Aira. These devices use computer vision algorithms in real time to provide augmented reality overlays, magnification, color enhancements, and text recognition for blind and low-vision users. Another way to support users with low vision, without a wearable device, is to make sure your web pages meet color contrast standards.
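A color contrast check of this kind can be automated. The sketch below assumes the standard in question is WCAG 2.x, which the text above does not name, and uses its published relative luminance and contrast ratio formulas together with the AA thresholds of 4.5:1 for normal text and 3:1 for large text. The function names are illustrative and not taken from any library.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to its linear-light value."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as '#RRGGBB' (WCAG 2.x definition)."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) to 21.0 (black on white)."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check a color pair against the assumed WCAG AA thresholds."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


if __name__ == "__main__":
    for fg in ("#000000", "#767676", "#777777"):
        ratio = contrast_ratio(fg, "#FFFFFF")
        print(f"{fg} on white: {ratio:.2f}:1, AA normal text: {passes_aa(fg, '#FFFFFF')}")
```

Running the script compares black and two nearly identical grays against a white background, which shows how a small change in shade can move a color pair across the threshold.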

Additionally, AI-powered image recognition systems can analyze retinal images for early diagnosis and monitoring of eye diseases like diabetic retinopathy, macular degeneration, and glaucoma.

Ongoing advancements in AI and computer vision offer promising prospects for better understanding 3D scenes, improved object recognition, and the potential development of bionic vision systems. It is important to note that AI-powered vision enhancement is a rapidly evolving field with continuous research and technological advancements shaping opportunities for individuals with visual disabilities.

AI-Enhanced Content and Accessibility

Automated Generation of Alternative Text

What is alt-text?

Alt-text, short for alternative text (sometimes called alt tags), is a text description of an image that appears online. It is set in the alt attribute of the <img> HTML tag and is generally not visible on a web page unless the image fails to load. Its purpose is to convey the information in the image to those who cannot see it: blind or low-vision users, users whose poor internet connection prevents images from loading, and search engine bots.
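Because alt text lives in an attribute of the <img> tag, a page can be checked for images that lack it. The following is a minimal sketch using Python's standard `html.parser` module; the class and function names are hypothetical. It treats an empty `alt=""` as present, since that is the usual way to mark a purely decorative image.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> tag that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # alt="" counts as present: it marks a decorative image.
        if "alt" not in attributes:
            self.missing.append(attributes.get("src") or "(no src)")


def find_images_missing_alt(html):
    """Return the src values of images in `html` that have no alt attribute."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


if __name__ == "__main__":
    page = """
    <img src="logo.png" alt="Company logo">
    <img src="chart.png">
    <img src="divider.png" alt="">
    """
    print(find_images_missing_alt(page))  # ['chart.png']
```

A check like this only finds missing attributes. It cannot tell whether the text that is present describes the image well.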

How can AI help with generating alt-text?

Understanding image content poses a critical challenge for individuals with visual disabilities. AI-driven solutions enable websites to automatically generate alternative text (alt text) for images. By analyzing the content of images, AI algorithms can produce descriptions that help blind and low-vision users comprehend visual context without relying on sight.

While the accuracy and effectiveness of these automated solutions may vary, here are a few examples of tools or platforms that offer automatic alt-text generation:

- Microsoft Seeing AI: Seeing AI is a mobile app developed by Microsoft that uses AI to provide audio descriptions of the surrounding environment for individuals with visual disabilities. It can also automatically generate alt text descriptions for images captured by the device’s camera.

- Google Photos: Google Photos incorporates AI algorithms to automatically generate alt text for images uploaded to the platform. The generated descriptions can be used to improve accessibility for individuals who rely on screen readers or other assistive technologies.

- Adobe Sensei: Adobe Sensei, an AI and machine learning framework, includes features designed to assist with accessibility. Adobe’s applications, such as Photoshop, incorporate AI technology to automatically suggest alt text for images, providing an easier way to add accessibility features.

It’s worth noting that while these automated tools can be beneficial and save time, it’s still important to review and manually adjust the generated alt text to ensure accuracy and specificity, as automated systems may not always capture the full context or details of an image.
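Part of that manual review can be prioritized with simple checks. The sketch below flags generated alt text that most likely needs a human look. The rules are illustrative assumptions rather than part of any standard or of the tools listed above: the 125-character limit is a commonly quoted rule of thumb, and the prefix and file-extension lists are hypothetical.

```python
import re

# Assumed rule of thumb, not a formal requirement.
MAX_LENGTH = 125
# Alt text that is really just a file name, e.g. "IMG_2041.jpg".
FILENAME_PATTERN = re.compile(r"\.(png|jpe?g|gif|svg|webp)$", re.IGNORECASE)
# Screen readers already announce that the element is an image.
REDUNDANT_PREFIXES = ("image of", "picture of", "photo of")


def review_flags(alt_text):
    """Return a list of reasons why this alt text should be reviewed by a person."""
    text = alt_text.strip()
    if not text:
        return ["empty (acceptable only for decorative images)"]
    flags = []
    if FILENAME_PATTERN.search(text):
        flags.append("looks like a file name")
    if text.lower().startswith(REDUNDANT_PREFIXES):
        flags.append("redundant prefix")
    if len(text) > MAX_LENGTH:
        flags.append("longer than %d characters" % MAX_LENGTH)
    return flags


if __name__ == "__main__":
    for candidate in ("IMG_2041.jpg", "Image of a dog", "A golden retriever catching a frisbee"):
        print(repr(candidate), "->", review_flags(candidate))
```

An empty list means none of these heuristics fired. It does not mean the description is accurate, so a reviewer should still compare it against the image.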

Real-time Transcription for Multimedia Content

AI in Closed Captioning and Subtitling

Closed captioning and subtitling have improved markedly with the integration of artificial intelligence (AI), which has made both processes more accurate, more efficient, and more accessible.

With AI algorithms, real-time speech recognition and natural language processing have seen significant advancements. AI-powered systems can now automatically transcribe spoken dialogue, accurately detect speakers, and generate near real-time captions or subtitles. Machine learning techniques enable continuous learning and adaptation to different languages, accents, and speech patterns.

AI-powered transcription tools extend web accessibility to multimedia content by generating real-time captions for videos, audio files, and podcasts. This empowers individuals who are deaf or hard of hearing to fully engage with multimedia content. It also benefits those who prefer reading along or require subtitles in noisy environments.
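Once a transcription system has produced timed text, it still has to be written in a caption format that media players understand. The sketch below assumes the transcript arrives as a list of segments with start and end times in seconds, text, and an optional speaker label (a hypothetical `Segment` type, not the output of any particular tool) and formats them as WebVTT, the caption format used by the HTML `<track>` element.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One transcribed utterance (hypothetical shape of a transcriber's output)."""
    start: float  # seconds from the start of the media
    end: float
    text: str
    speaker: str = ""


def format_timestamp(seconds):
    """Format seconds as a WebVTT timestamp, hh:mm:ss.mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(segments):
    """Build the contents of a .vtt file from transcript segments."""
    lines = ["WEBVTT", ""]
    for seg in segments:
        lines.append(f"{format_timestamp(seg.start)} --> {format_timestamp(seg.end)}")
        # '&' and '<' must be escaped inside WebVTT cue text.
        text = seg.text.replace("&", "&amp;").replace("<", "&lt;")
        # A voice span (<v Name>) records who is speaking.
        lines.append(f"<v {seg.speaker}>{text}" if seg.speaker else text)
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    transcript = [
        Segment(0.0, 2.5, "Welcome back to the show.", speaker="Host"),
        Segment(2.5, 5.0, "Thanks for having me.", speaker="Guest"),
    ]
    print(to_webvtt(transcript))
```

Keeping the speaker label in a voice span rather than typing it into the caption text lets a player or stylesheet decide how to present it.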

AI Technology Offers Immense Potential For Enhancing Web Accessibility

As the capabilities of AI continue to advance, it has become a powerful tool for overcoming the barriers that individuals with disabilities face when accessing online information and services. The ability of AI algorithms to analyze and interpret data allows for the development of innovative solutions that make websites more inclusive and user-friendly. Furthermore, the continuous evolution of AI technology promises even greater advancements in web accessibility, offering the opportunity to create a more equal and inclusive digital environment for all.