What Google’s algorithm leak may reveal about getting cited by ChatGPT and Perplexity, and why it’s not too dissimilar from SEO.

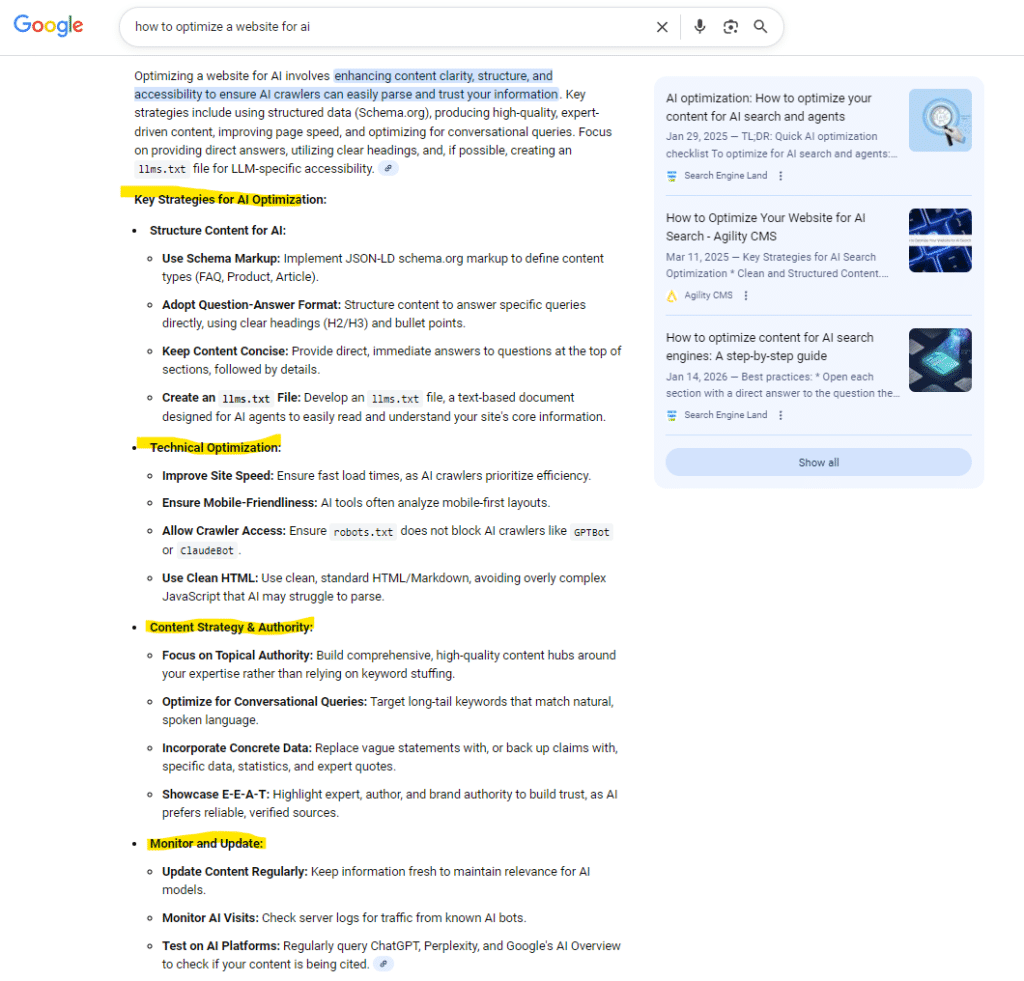

In 2026 we’re neck deep in AI. As AI gobbles up traditional search marketing, we’re digging deeper into what it takes to optimize a website for AI search (GEO, AEO). Answer engine optimization (AEO) has matured over the past 6-12 months to the point where a real, documented methodology is emerging for optimizing your website for AI search.

But SEO isn’t dead yet, and the more we learn, the clearer it becomes how much SEO and AEO overlap (so much so that my preferred shorthand is AiSEO). A study by BrightEdge found that 52% of AI Overview citations came from URLs already ranking in the top 10 organic positions.

It’s becoming clear that AI search engines and Google are measuring similar quality signals, just weighted differently. For years, SEOs have been reverse-engineering the black box of Google Search with equal measures of intuition and experimentation. That all changed in May 2024 when Google’s ranking signals were leaked.

In this article, we’ll walk through leaked and verified Google ranking signals, how those same practices are reflected in both proven and experimental AI optimization techniques, and how a better understanding of how Google search works can help us understand AEO as well.

How AI Search Actually Works

If you’re going to optimize for AI search, it helps to first understand how these systems retrieve and process information. The challenge is that each platform works differently, and the landscape has shifted significantly even in the past year.

Where LLM platforms get their information

Here’s where things get practical. Each major AI search platform has a primary data source, and if you’re not indexed by that source, you won’t appear in that platform’s results.

| Platform | Primary Data Source | What This Means |
|---|---|---|
| ChatGPT | Bing index | If Bing hasn’t indexed your page, ChatGPT can’t cite it. OpenAI’s VP of Engineering confirmed Bing is “an important” part of their search functionality. |
| Perplexity | Own index + real-time crawling | Perplexity runs its own crawler (PerplexityBot) and uses what they call “sub-document processing”: indexing granular snippets rather than whole pages. |
| Google AI Overviews / AI Mode | Google’s index + Knowledge Graph | Uses a “query fan-out” technique, running multiple related searches simultaneously to construct comprehensive answers. |
| Claude | Brave Search | Instead of Google or Bing, Claude pulls from Brave’s independent index, which has its own signals and ranking logic. |
| Microsoft Copilot | Bing index | Built directly on Bing’s infrastructure, blending traditional search with generative AI responses. |

The practical implication: you may need to verify indexation across multiple search engines to ensure visibility across AI platforms. Bing Webmaster Tools suddenly matters a lot more than it did two years ago.

The Crawlers to Know About

If you want to verify AI crawlers can access your content, you’ll need to know their user agents. Make sure these aren’t blocked in your robots.txt:

- GPTBot (OpenAI/ChatGPT)

- ChatGPT-User (ChatGPT browsing)

- PerplexityBot (Perplexity)

- ClaudeBot / Claude-Web (Anthropic)

- Google-Extended (Gemini/AI training, though blocking this won’t affect AI Overviews)

A word of caution: some site owners are blocking AI crawlers out of concern about content being used for training. That’s a legitimate choice, but understand the tradeoff. Blocking these crawlers may reduce your visibility in the AI search products those companies operate.
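If you’d like to check this programmatically, here’s a minimal sketch using Python’s standard-library `urllib.robotparser`. The `blocked_crawlers` helper, the sample robots.txt, and the `example.com` URL are our own illustrative assumptions; the user-agent names come from the list above. It only evaluates robots.txt rules and says nothing about blocks applied at the CDN or firewall level.

```python
from urllib.robotparser import RobotFileParser

# User agents named in the list above.
AI_CRAWLERS = [
    "GPTBot",
    "ChatGPT-User",
    "PerplexityBot",
    "ClaudeBot",
    "Claude-Web",
    "Google-Extended",
]


def blocked_crawlers(robots_txt: str, url: str = "https://example.com/") -> list[str]:
    """Return the AI crawlers that this robots.txt disallows for `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in AI_CRAWLERS if not parser.can_fetch(agent, url)]


# Hypothetical robots.txt: blocks one AI crawler, allows everyone else.
SAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

print(blocked_crawlers(SAMPLE_ROBOTS))
```

To run it against a real site, paste in the contents of your own robots.txt and pass a URL you care about as the second argument.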

How information retrieval actually works

Understanding retrieval mechanics helps explain why content structure matters so much.

Perplexity has been most transparent about their search architecture. Traditional search engines index and rank whole pages. Perplexity uses “sub-document processing”—indexing granular snippets rather than full documents. When you query their system, it retrieves around 130,000 tokens of the most relevant snippets to feed the AI.

As for the index technology, the biggest difference in AI search right now comes down to whole-document vs. “sub-document” processing.

Traditional search engines index at the whole document level. They look at a webpage, score it, and file it.

When you use an AI tool built on this architecture (like ChatGPT web search), it essentially performs a classic search, grabs the top 10–50 documents, then asks the LLM to generate a summary. That’s why GPT search gets described as “4 Bing searches in a trenchcoat” —the joke is directionally accurate, because the model is generating an output based on standard search results.

Jesse Dwyer of Perplexity, interviewed on SEJ

The system is literally extracting chunks of your content. If your key information is buried mid-paragraph, it may not get selected.
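To make the idea of chunk-level extraction concrete, here’s a rough sketch that splits a Markdown page into heading-delimited chunks and prints the opening sentence of each. This is not Perplexity’s actual pipeline (which isn’t public); the heading-based splitting rule, the function names, and the sample page are all assumptions chosen for illustration.

```python
import re


def split_into_chunks(markdown_text: str) -> list[dict]:
    """Split a Markdown page into heading-delimited chunks."""
    chunks = []
    current = {"heading": "(intro)", "lines": []}
    for line in markdown_text.splitlines():
        match = re.match(r"^#{1,6}\s+(.*)", line)
        if match:
            # A new heading closes the previous chunk and opens the next one.
            if current["lines"]:
                chunks.append(current)
            current = {"heading": match.group(1).strip(), "lines": []}
        elif line.strip():
            current["lines"].append(line.strip())
    if current["lines"]:
        chunks.append(current)
    return [{"heading": c["heading"], "text": " ".join(c["lines"])} for c in chunks]


def first_sentence(text: str) -> str:
    """Return the opening sentence of a chunk."""
    return re.split(r"(?<=[.!?])\s+", text, maxsplit=1)[0]


# Hypothetical page used only to demonstrate the split.
SAMPLE_PAGE = """\
# Can you optimize for ChatGPT?
Yes, you can optimize your website for ChatGPT. Start by confirming Bing has indexed your pages.

## How does ChatGPT find pages?
ChatGPT search draws on Bing's index. Pages missing from Bing cannot be cited.
"""

for chunk in split_into_chunks(SAMPLE_PAGE):
    print(chunk["heading"], "->", first_sentence(chunk["text"]))
```

Reading your own pages this way is a quick gut check: if the first sentence under a heading doesn’t answer the heading, the key information is probably buried.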

Google takes a different approach. AI Overviews and AI Mode use “query fan-out”—issuing multiple related searches across subtopics simultaneously to construct an answer. You’re not just trying to rank for one query anymore; you’re trying to be the best answer for a cluster of related queries.

Why traditional SEO still matters (but isn’t enough)

Traditional SEO isn’t dead—it’s foundational. Google’s documentation confirms: to appear in AI Overviews or AI Mode, a page must be indexed and eligible to show a snippet.

But there’s a crucial difference in what happens next.

Traditional search lists and links—the user chooses. AI search synthesizes and cites—the AI chooses what to extract.

Being “good enough to rank” gets you into the consideration set. But being “good enough to cite” is what actually gets you into the response. A page can rank #3 and never get cited if its content isn’t structured for easy extraction or doesn’t directly answer the question.

In the next section, we’ll get into the specific signals Google’s API leak revealed that they’re measuring, and how those signals also map to what AI search engines likely value too.

Four core AI search optimization tactics

Each GEO/AEO tactic below is fairly agreed upon by practitioners. But are these new tactics, or is this the same old SEO playbook, dusted off and repackaged for AI? We’ll let you be the judge: alongside each AEO principle is a ranking factor confirmed as part of the Google leak.

Tactic 1: Content Structure

Optimizing your content structure will help AI ingest your content and increase your brand’s mentions and citations. Well-structured content will be easier for both bots and humans to read and understand.

Here are a few practices to improve your content structure to optimize for AI:

- Front-load key information in every section; don’t bury the lede. This is really important. If you’re making a claim or suggesting you’re going to answer a question, don’t bury it beneath a long-winded introduction or in the middle of a large paragraph.

- Use headers that align with users’ questions (and query fan-out). Do your research to understand the topic and what questions people might have about it. Do your best to align your page or article around this framework. If you want to understand how Google thinks about a topic’s related searches, do a few searches and look at the AIOs to see what subtopics are included in their answer.

- Create self-contained sections that can be extracted independently. This goes back to the idea of sub-document processing and passage indexing. AI is going to combine information from multiple sources to address each subtopic. The stronger you optimize for each subtopic within your pages, the greater chance you have for a mention or citation for each.

- Include direct answers near the top of relevant pages. Don’t mince words when answering a question. Check out two versions of the same answer below.

Example question: Can you optimize for ChatGPT searches?

Meandering answer:

- “The question of whether you can optimize your website for ChatGPT is one that many marketers are asking these days. It’s a complex topic with a lot of nuance. There are several factors to consider when thinking about this, including how ChatGPT retrieves information, what sources it tends to cite, and whether traditional SEO practices still apply. Some experts believe that optimizing for AI is essentially the same as optimizing for Google, while others argue there are important differences to consider. The short answer is yes, there are things you can do, but it depends on your goals and what kind of content you’re creating.”

Direct, citation-optimized answer:

- “Yes, you can optimize your website for ChatGPT. ChatGPT pulls information from Bing’s index, so ensuring your pages are indexed by Bing is the first requirement. Beyond indexation, structure your content with clear headings, front-load direct answers, and include the statistics and specifics that AI systems favor when selecting sources to cite.”

What’s the SEO overlap, according to Google’s API leak?

Below are a few items from the leak that have symmetry with the content structure tactic for AI.

- leadingText — Google explicitly stores the page’s leading text. By frontloading key information, you’re optimizing for both Google and AI.

- numTokens — Maximum tokens processable per page (documents get truncated). Like Google, LLMs have constraints around context windows. The longer a page, the higher the chance the entire page won’t get ingested or will get compressed. The order of your content matters.

- titlematchScore — How well title tags match user queries (tracked at page AND site level). If you say a page is about something and then you do a poor job covering that topic, that hurts your website. Similar to AI, are you claiming to answer a question only to start off with meandering prose?

Research supports this strategy for AI optimization

Through rigorous evaluation, a team of researchers from Princeton demonstrated that GEO can boost visibility by up to 40% in generative engine responses. Their research found that authoritative, well-structured articles were more likely to be cited by AI.

Tactic 2: Semantic Depth and Topical Coverage

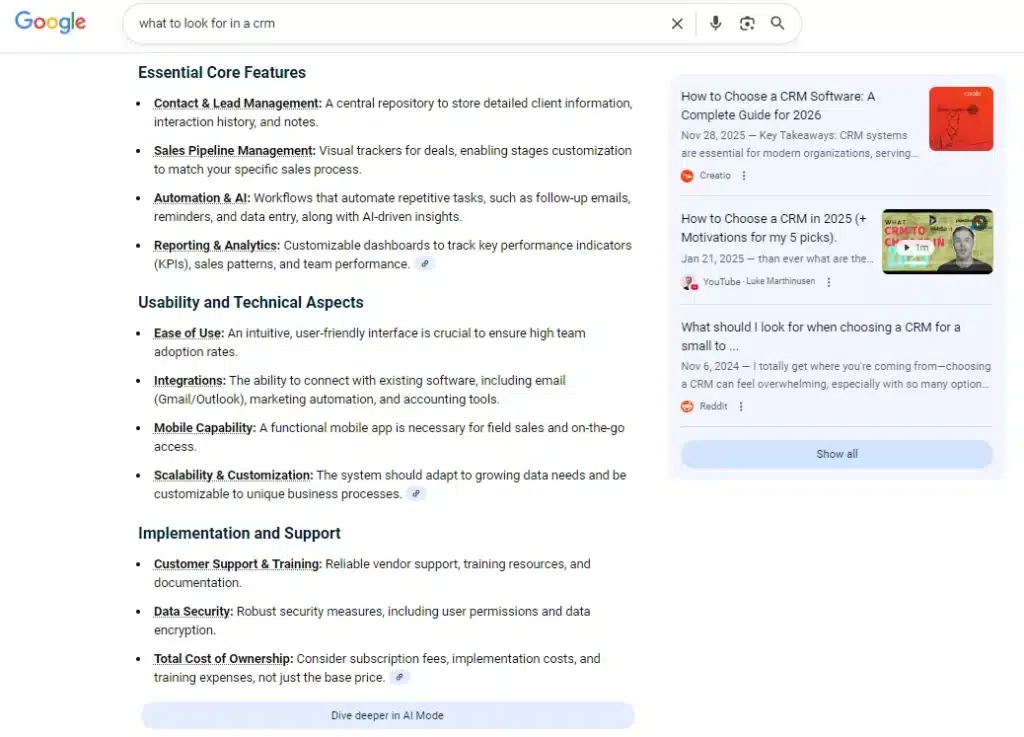

LLMs don’t just match keywords—they understand topics. When you search for something like “best CRM for small business,” the AI isn’t looking for pages that repeat that phrase. It’s looking for content that comprehensively covers the topic: pricing considerations, integration capabilities, ease of use, scalability, support options. If your page only covers half of what users expect, you’re less likely to get cited.

Here are a few practices to improve your semantic depth:

- Map the full semantic field before you write. Before creating content, research what subtopics users expect. The easiest way to do this: search your target query and look at what Google’s AI Overview includes. Those subtopics are what Google (and likely other AI systems) consider essential to a complete answer. You can also use tools like AlsoAsked or look at “People Also Ask” boxes to map related questions.

- Include the terms users expect—but naturally. This isn’t about keyword stuffing. In fact, the Princeton GEO research found that keyword stuffing performs worse than baseline in AI search. Instead, it’s about ensuring your content includes the concepts and terminology that signal expertise. If you’re writing about email marketing, you should probably mention deliverability, open rates, segmentation, and automation—because that’s what a comprehensive piece would cover.

- Stay topically focused. Google’s siteFocusScore measures how much a site concentrates on specific topics. Sites that try to cover everything tend to have weaker topical authority than sites that go deep on fewer subjects. The same logic applies to individual pages—don’t dilute a focused article with tangential content.

- Add statistics and data points. The Princeton research found that adding relevant statistics was one of the highest-performing optimization strategies for AI visibility. Concrete numbers give AI systems something specific to cite and signal that your content is well-researched.

Example: Thin vs. semantically rich content

Thin coverage:

- “CRM software helps businesses manage customer relationships. There are many CRM options available. You should choose one that fits your needs and budget.”

Semantically rich coverage:

- “CRM software centralizes customer data, automates sales workflows, and tracks interactions across email, phone, and chat. For small businesses, key considerations include per-user pricing (typically $12-$79/month), native integrations with tools like Gmail and QuickBooks, and implementation complexity. Salesforce dominates enterprise, but HubSpot and Pipedrive often better serve teams under 50 users due to simpler onboarding.”

The second version covers pricing, integrations, use cases, and specific products—the subtopics AI would expect in a comprehensive answer.

What’s the SEO overlap, according to Google’s API leak?

- QBST (Query-Based Salient Terms) — Google trains models on what terms should appear for specific queries. If you’re missing expected terms, you’re signaling incomplete coverage.

- siteFocusScore — How concentrated your site is on specific topics. Topical authority matters for both Google and AI citation likelihood.

- DeepRank / BERT integration — Google’s language understanding is deeply tied to ranking. Semantic relevance isn’t a nice-to-have; it’s how the algorithm works.

Research supports this strategy

The same Princeton GEO study we linked earlier found that combining fluency optimization with statistics addition outperformed single-tactic approaches by 5.5% or more. The researchers specifically noted that keyword stuffing hurt performance—semantic depth isn’t about repetition, it’s about comprehensive coverage.

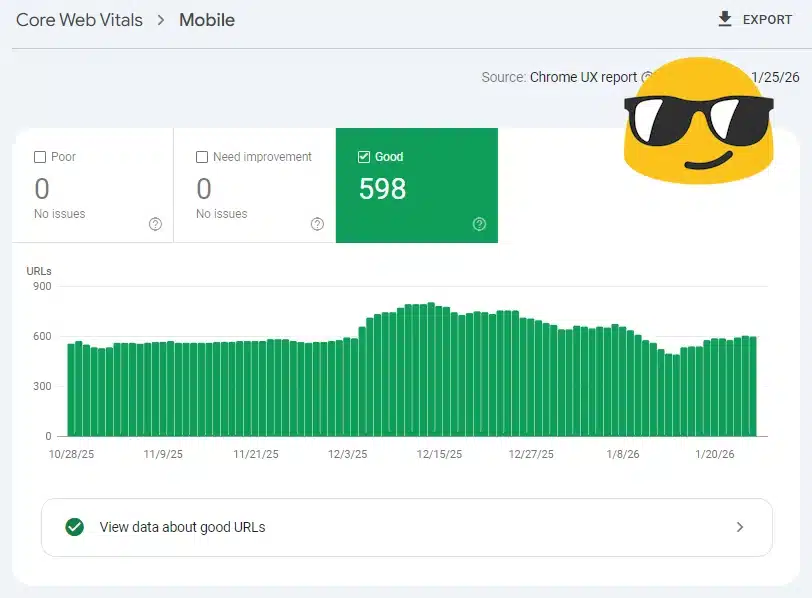

Tactic 3: Technical Foundation

This one is less exciting than content strategy, but it’s often where optimization efforts fail before they start. If AI crawlers can’t access your content, nothing else matters.

Here are the technical fundamentals:

- Verify you’re indexed where it matters. ChatGPT and Copilot pull from Bing. Claude pulls from Brave. AI Overviews pull from Google. If you’ve been ignoring Bing Webmaster Tools for years, now’s the time to check it. A page that ranks well on Google but isn’t indexed by Bing won’t appear in ChatGPT responses.

- Ensure server-side rendering for critical content. Most AI crawlers don’t execute JavaScript the way a browser does. If your key content loads dynamically via JS, crawlers may see an empty page. Server-side rendering (SSR) or static generation ensures your content is visible to all crawlers.

- Implement structured data. Schema markup helps AI systems understand what your content is and how entities relate to each other. Prioritize: Article, FAQPage, HowTo, Organization, Person, and Product schemas depending on your content type. This isn’t just for rich snippets anymore—it’s how you make your content machine-readable.

- Check your robots.txt. Some site owners have blocked AI crawlers (GPTBot, ClaudeBot, PerplexityBot) either intentionally or accidentally. Cloudflare has a one-click feature that will block all AI bots from crawling your site. That’s vital for media companies that want to protect their content, but if you want AI visibility, make sure you’re not blocking the crawlers that feed those systems.

- Maintain Core Web Vitals. Page speed and user experience signals influence crawl priority. Google’s leak confirmed that frequently-updated, high-quality content gets preferential storage (flash vs. HDD in their infrastructure). While we can’t know exactly how AI systems weight speed, slow pages have always been at a disadvantage for crawling.
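For the structured data item, here’s a minimal sketch of generating an Article JSON-LD snippet with Python’s standard library. The `article_json_ld` helper and every value passed to it are placeholders we made up; the property names (`headline`, `author`, `datePublished`, `mainEntityOfPage`) are standard schema.org vocabulary. Treat it as a starting point and validate the output with a schema testing tool before shipping.

```python
import json


def article_json_ld(headline: str, author_name: str, date_published: str, url: str) -> str:
    """Build a <script> tag containing schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so a stray "</script>" inside a value cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values for illustration only.
print(
    article_json_ld(
        headline="How AI Search Actually Works",
        author_name="Jane Doe",
        date_published="2026-01-15",
        url="https://example.com/ai-search",
    )
)
```

The resulting tag would typically go in the page’s `<head>`, rendered server-side so crawlers that don’t execute JavaScript still see it.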

Quick audit checklist

- Pages indexed in Google Search Console

- Pages indexed in Bing Webmaster Tools

- GPTBot, ClaudeBot, PerplexityBot not blocked in robots.txt

- Critical content renders without JavaScript

- Schema markup implemented and validated

- Core Web Vitals passing
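One way to spot-check the “renders without JavaScript” item is to look at the raw HTML your server returns and confirm your key phrases are in it. The sketch below does this with the standard-library `html.parser`; the `missing_phrases` helper and both sample pages are hypothetical, and it works on an HTML string you supply rather than fetching anything itself.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collect text present in the raw HTML, skipping script and style bodies."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def missing_phrases(raw_html: str, phrases: list[str]) -> list[str]:
    """Return the phrases that do NOT appear in the page's non-script text."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(parser.parts).lower()
    return [p for p in phrases if p.lower() not in text]


# Hypothetical pages: one server-rendered, one that injects content via JS.
SSR_HTML = "<html><body><h1>Pricing</h1><p>Plans start at $12 per user.</p></body></html>"
CSR_HTML = (
    '<html><body><div id="root"></div>'
    '<script>render("Plans start at $12 per user.")</script></body></html>'
)

print(missing_phrases(SSR_HTML, ["Plans start at $12"]))
print(missing_phrases(CSR_HTML, ["Plans start at $12"]))
```

In practice you’d feed it the response body from a plain HTTP request (no headless browser) for each important URL.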

What’s the SEO overlap, according to Google’s API leak?

- sourceType — Google tracks the relationship between where content is indexed and its value. Being in the primary index matters.

- Index tiers (Base/Zeppelins/Landfills) — Google calls their different storage buckets Base, Zeppelins, and Landfills. Quality determines storage priority. Low-quality or inaccessible content gets deprioritized… to the landfill.

- ChromeInTotal — Chrome usage data influences indexing decisions. Sites with real user engagement get crawled more frequently.

Why this matters for AI specifically

AI systems inherit the biases and limitations of their underlying indexes. If Bing’s crawler can’t render your JavaScript-heavy page, that content doesn’t exist for ChatGPT. Technical SEO has always mattered, but now it matters across multiple indexes simultaneously.

Tactic 4: Authority and Citation Worthiness

This is where things get harder to shortcut. AI systems need to cite sources that users will trust—which means they’re looking for signals of genuine authority and expertise.

Here’s what builds citation worthiness:

- Create original research and proprietary data. Nothing signals authority like data no one else has. Original surveys, case studies with real numbers, proprietary benchmarks—these give AI systems something unique to cite. If your content just synthesizes what’s already out there, you’re competing with everyone else who did the same thing. Synthesizing what’s already out there is now only one prompt away, so we need to raise the bar for our content.

- Establish clear authorship. Google’s leak revealed authorReputationScore as a direct input into quality models, and isAuthor/isPublisher flags that affect confidence levels. Make sure your content has clear bylines, author pages with credentials, and links to authors’ other work and profiles. Much like LLMs have embeddings for your brand, they have embeddings for your people, too.

- Build brand mentions across platforms. Brands present on multiple review platforms (G2, Capterra, Trustpilot, Sitejabber, Yelp) average 4.6-6.3 ChatGPT citations compared to 1.8 for brands without. That’s up to a 3.5x multiplier! Additionally, Ahrefs’ analysis of 75,000 brands found that YouTube mentions showed the strongest correlation with AI visibility (~0.737). This isn’t just about backlinks; it’s about your brand being part of the conversation across the web: review sites, YouTube mentions, podcast appearances, industry publications.

- Demonstrate genuine expertise and effort. Google’s contentEffort signal uses LLM-based estimation to assess how much work went into a piece. The Quality Rater Guidelines are explicit: “If very little or no time, effort, expertise, or talent/skill has gone into creating the MC, use the Lowest quality rating.” AI systems are likely using similar heuristics.

- Build comprehensive author and organization pages. These serve as entity hubs that help both Google and AI systems understand who you are and why you’re credible. Include credentials, experience, publications, speaking engagements, and external validation.

A counterintuitive finding on backlinks

Traditional SEO wisdom says more backlinks = more authority. But research on AI citations tells a different story: sites with 1-9 backlinks averaged 2,160 AI citations, while sites with 10+ backlinks averaged only 681.

This doesn’t mean backlinks don’t matter—it suggests that AI systems may weight authority signals differently than traditional search. Brand recognition and content quality might matter more than raw link counts.

What’s the SEO overlap, according to Google’s API leak?

- siteAuthority — Direct input into Google’s quality scoring. Site-level trust matters.

- contentEffort — LLM-based effort estimation. Google is literally using AI to assess how much work went into your content.

- OriginalContentScore — Scored 0-512. Uniqueness is measured and valued.

- authorReputationScore — Author credibility feeds directly into quality models.

“Build a notable, popular, well-recognized brand in your space.”

Rand Fishkin on the significance of the Google leak, SparkToro

Why does brand matter so much? In Google’s systems, brand strength shows up everywhere: people typing your name directly into search, clicking your result over competitors, linking to you without being asked. The API leak revealed that Google tracks all of these behaviors and uses them to reinforce rankings for recognizable brands. For AI search, brand recognition may be weighted even more heavily—when an LLM cites you, it’s staking its own credibility on yours.

Bonus Tactic: Content Governance and Consistency

This one emerged from a client conversation and doesn’t have the same research backing as the other tactics. But the logic is sound, and the API leak supports it.

A client was training an internal AI chatbot on their website content. They discovered that inconsistent or conflicting information across their site confused the model and degraded the quality of its responses. The chatbot would sometimes give contradictory answers because the source material contradicted itself.

The same principle likely applies to how external LLMs evaluate your site as a source.

Think of it like NAP consistency for AI

In local SEO, consistent NAP (Name, Address, and Phone) across all citations is foundational. Inconsistencies confuse Google about which information is correct and hurt your local rankings.

For AI optimization, the equivalent might be consistent answers across your own content. If your pricing page says one thing, your FAQ says another, and an old blog post says something different—that’s a problem.

Here’s why:

- Confidence scoring. If your site contradicts itself, LLMs have lower confidence citing you. Why would they cite a source that might give users wrong information?

- Hallucination risk. Inconsistent source material leads to confused AI outputs. LLMs may simply avoid sources that introduce noise into their responses.

- Entity understanding. LLMs build representations of brands and products. Inconsistent information makes it harder for them to understand what you actually offer.
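A simple way to start auditing for this is to pull the same fact from every page that states it and see whether the values agree. The sketch below is one assumed approach, not an established tool: `find_conflicts`, the sample pages, and the “Pro plan” pricing pattern are all invented for illustration.

```python
import re


def find_conflicts(pages: dict[str, str], pattern: str) -> dict[str, list[str]]:
    """Map each distinct captured value to the pages that state it.

    `pattern` must contain exactly one capture group for the value of interest.
    More than one key in the result means the site contradicts itself.
    """
    found: dict[str, list[str]] = {}
    for page, text in pages.items():
        for value in re.findall(pattern, text):
            pages_for_value = found.setdefault(value, [])
            if page not in pages_for_value:
                pages_for_value.append(page)
    return found


# Hypothetical site content: an old blog post disagrees with the pricing page.
SAMPLE_PAGES = {
    "/pricing": "The Pro plan costs $49 per month.",
    "/faq": "Our Pro plan costs $49 per month, billed annually.",
    "/blog/2023-launch": "The Pro plan costs $39 per month.",
}

conflicts = find_conflicts(SAMPLE_PAGES, r"Pro plan costs (\$\d+)")
for value, where in conflicts.items():
    print(value, "stated on", where)
```

Exact-match patterns like this only catch facts phrased the same way everywhere, so treat a clean result as a first pass rather than proof of consistency.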

What’s the SEO overlap, according to Google’s API leak?

- chardVariance / chardScoreVariance — Google measures consistency across sites. Variance in quality signals hurts you.

- site2vec vectors — Google vectorizes entire sites and compares individual pages against the site’s overall embedding. Off-message content stands out.

- siteFocusScore + siteRadius — How far pages deviate from your core theme. Consistency matters.

A note on this tactic

This is an emerging best practice without direct research validation for AI search specifically. But the logic aligns with both the API leak signals and how LLMs process information. We’re treating it as a reasonable hypothesis worth acting on—and the downside of having consistent, accurate content across your site is… nothing. It’s just good practice.

Experimental & Emerging Tactics for AI Optimization

Preparing your website for AI agents

Google, Anthropic, OpenAI, Amazon, and others have all announced some form of AI agents. These aren’t chatbots. They’re systems designed to browse, compare, and eventually purchase on behalf of users. While current agents are hardly usable for most tasks, the trajectory is clear: when AI agents work well, humans will use them because humans prize convenience.

Here’s what this means practically:

1. Your API becomes a front door. As Microsoft’s Azure AI Foundry demonstrates, structured APIs are becoming how machines communicate with businesses.

2. Verifiable information beats persuasive copy. Agents making decisions need facts they can validate: pricing, specifications, availability, reviews. This doesn’t mean abandoning brand voice—it means ensuring your core claims are backed by verifiable data.

3. Your owned audience becomes your lifeline. If you’re building traffic, the AI wave is a threat. If you’re building an audience, you can survive. Email lists, SMS subscribers, app users, and community members become increasingly valuable as AI agents intercept the discovery layer.

I wrote more extensively about this in Optimizing for AI Agents: Preparing for the Post-Human Internet. The short version: the fundamentals remain—providing genuine value, building relationships, solving real problems—but the mechanics of how we accomplish this will undergo a dramatic shift.

Google’s Universal Commerce Protocol (UCP)

What It Is: Speaking of agents, Google announced UCP, an open-source standard designed for agentic commerce. It establishes a common language for AI agents and commerce systems to work together across the entire shopping journey. It’s sorta like MCP for AI tool use, but for eCommerce (read Google’s dev docs on UCP).

Here are some key details about UCP:

- Co-developed with Shopify, Etsy, Wayfair, Target, Walmart

- Endorsed by 20+ partners: Adyen, American Express, Best Buy, Mastercard, Stripe, Visa, etc.

- Compatible with existing protocols: A2A (Agent2Agent), AP2 (Agent Payments Protocol), MCP (Model Context Protocol)

- Will power upcoming checkout directly in AI Mode in Search and Gemini app

I’m still skeptical that most people will use AI to directly buy things. Especially products that require a high degree of consideration. Would you ask ChatGPT to go out and buy you a pair of shoes? Likely not (yet). However, people using AI to place grocery orders seems very possible.

Google is using Merchant Center as the main vehicle for AI discovery, including “branded AI agents” that retailers can activate. This means optimizing your product feed is about to get even more important.

LLMs.txt and LLMs-full.txt

What It Is: A proposed standard for AI website content crawling, created by Jeremy Howard (founder of FastAI/AnswerAI). Acts like a curated sitemap specifically for LLMs.

- llms.txt — Curated list of key URLs in Markdown format; guides AI to most important pages

- llms-full.txt — Complete site content in a single markdown file for easy context loading

- Placed in root directory (like robots.txt)

- Written in Markdown for machine readability
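Here’s a minimal sketch of generating an llms.txt file. The layout (an H1 site name, a blockquote summary, then H2 sections of Markdown links with short notes) follows the published proposal as we understand it; the `build_llms_txt` helper, the site name, and the URLs are placeholders of our own.

```python
def build_llms_txt(site_name: str, summary: str, sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render an llms.txt document from (title, url, note) link tuples grouped by section."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for section_title, links in sections.items():
        lines.append(f"## {section_title}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder content for a hypothetical site.
llms_txt = build_llms_txt(
    site_name="Example Co",
    summary="Example Co makes scheduling software for small clinics.",
    sections={
        "Docs": [
            ("API reference", "https://example.com/docs/api", "Endpoints and authentication"),
        ],
        "Product": [
            ("Pricing", "https://example.com/pricing", "Current plans and per-user prices"),
        ],
    },
)
print(llms_txt)
```

Save the output as `llms.txt` in your site’s root directory, next to robots.txt.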

For llms-full.txt specifically, adoption remains niche but growing. According to llms-txt.io, over 784 websites have documented implementations as of late 2025, with notable adopters including Anthropic, Cloudflare, Stripe, Cursor, and Zapier—adoption is concentrated almost entirely in AI firms, developer tools, technical documentation, and SaaS platforms rather than mainstream sites.

The honest assessment: no major LLM search engine has officially announced support for the protocol, and SE Ranking’s analysis of 300,000 domains found no measurable correlation between having an llms.txt file and AI citation frequency. That said, it’s low effort to implement (plugins are available for AIOSEO and Yoast), and it’s most valuable for sites with APIs, developer documentation, or complex product information where giving AI systems a clear map of your content makes intuitive sense.

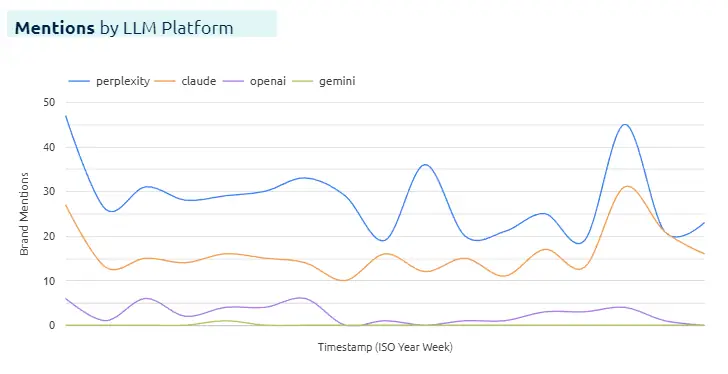

Measuring What Matters in the Age of AI

If traditional SEO has taught us anything, it’s that what gets measured gets optimized. But the metrics that defined success for a decade—rankings, organic sessions, click-through rates—don’t fully capture performance in AI search.

We need new measurement frameworks. Not to replace traditional metrics, but to complement them as AI becomes a larger part of how people discover information.

Share of Search: The Demand Signal

Share of Search measures the percentage of branded searches your company receives relative to competitors in your category. It’s calculated using Google keyword data: searches for your brand divided by searches for all brands in your competitive set, typically smoothed over a 6-12 month rolling average.

The research backing this metric is substantial. Studies from the IPA (Institute of Practitioners in Advertising) found that Share of Search represents approximately 83% of a brand’s market share. Les Binet’s original research showed Share of Search can predict market share changes 6-12 months ahead—making it one of the few leading indicators available to marketers.

Why does this matter for AI optimization? Because Share of Search is platform-agnostic. It measures underlying brand demand regardless of where that demand surfaces. If your optimization efforts are working, more people should be searching for your brand by name. Share of Search captures this regardless of whether the initial touchpoint was a Google result, an AI Overview, or a ChatGPT recommendation.

AI Visibility Tracking: The Authority Signal

While Share of Search measures downstream demand, brand mention and citation tracking measures upstream visibility—how often and where your brand appears in AI-generated responses.

This is an emerging category with new tools launching regularly. The core functionality: these platforms run queries across AI systems (ChatGPT, Perplexity, Google AI Overviews, Claude, Gemini, Copilot) and track when your brand is mentioned, which of your URLs are cited, and how you compare to competitors.

We’ve also built a lightweight version of this: Our AI Visibility Tracker lets you check how your brand appears across major AI platforms for your target queries. It’s a starting point for understanding where you stand.

AI visibility KPIs you should be tracking:

- Brand mention frequency — How often your brand appears in responses to relevant queries

- Citation rate — How often AI systems link to your content as a source

- Share of voice — Your mentions relative to competitors for the same queries

- Platform variance — How visibility differs across ChatGPT vs. Perplexity vs. AI Overviews
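If you’re logging AI responses yourself, these KPIs can be computed from a simple list of records. The record shape below (`platform`, `brands`, `cited_domains`) is an assumption for illustration, as are the brand and domain names; commercial tracking tools will structure their data differently and may define share of voice in other ways.

```python
from collections import defaultdict


def visibility_kpis(responses: list[dict], brand: str, domain: str) -> dict:
    """Compute mention rate, citation rate, share of voice, and per-platform mention rate."""
    if not responses:
        raise ValueError("need at least one logged response")
    total = len(responses)
    mentions = sum(1 for r in responses if brand in r["brands"])
    citations = sum(1 for r in responses if domain in r["cited_domains"])
    all_brand_mentions = sum(len(r["brands"]) for r in responses)

    per_platform = defaultdict(lambda: [0, 0])  # platform -> [mentions, responses]
    for r in responses:
        per_platform[r["platform"]][1] += 1
        if brand in r["brands"]:
            per_platform[r["platform"]][0] += 1

    return {
        "mention_rate": mentions / total,
        "citation_rate": citations / total,
        "share_of_voice": mentions / all_brand_mentions if all_brand_mentions else 0.0,
        "mention_rate_by_platform": {p: m / n for p, (m, n) in per_platform.items()},
    }


# Hypothetical log of four AI responses to target queries.
SAMPLE_RESPONSES = [
    {"platform": "chatgpt", "brands": ["Acme", "Rival"], "cited_domains": ["acme.example"]},
    {"platform": "chatgpt", "brands": ["Rival"], "cited_domains": []},
    {"platform": "perplexity", "brands": ["Acme"], "cited_domains": ["acme.example"]},
    {"platform": "perplexity", "brands": ["Acme", "Rival"], "cited_domains": ["rival.example"]},
]

print(visibility_kpis(SAMPLE_RESPONSES, brand="Acme", domain="acme.example"))
```

Here share of voice is the brand’s mentions divided by all brand mentions across the logged responses, and the per-platform breakdown is what surfaces platform variance.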

Bringing It Together

Neither metric tells the complete story alone. Share of Search reflects brand demand only after your efforts have taken effect; it tells you what happened but not why. Citation tracking shows visibility but not business impact.

Add Share of Search and AI visibility tracking to your existing reporting. Track them monthly alongside your traditional SEO metrics. Look for correlations and divergences. Over time, you’ll develop a clearer picture of how AI search fits into your overall discovery ecosystem.

A Unified Framework for Optimization

The API Leak → AEO Bridge

The Google API leak gave us unprecedented evidence of what signals actually matter. While LLMs aren’t Google, they’re solving the same fundamental problem—determining what content is trustworthy, relevant, and valuable enough to surface to users.

The leak confirms what experienced SEOs suspected: there are no shortcuts. contentEffort is a signal. OriginalContentScore is a signal. Brand matters. Consistency matters.

The difference with AI search is that these quality signals may be weighted even more heavily—because when an LLM cites you, it’s staking its credibility on yours.

| Google Signal (Leaked) | What It Measures | AEO Parallel |
|---|---|---|
| siteAuthority / Q* | Site-level trust | Brand recognition, citation trustworthiness |
| contentEffort | Human labor invested | Original research, statistics, cited work |
| OriginalContentScore | Content uniqueness | Information gain, novel data |
| titlematchScore | Title-query alignment | Clear, extractable answers |
| leadingText / numTokens | Front-loaded content | Lead with the answer |
| QBST | Expected terms | Semantic completeness |
| NavBoost | User satisfaction | Content that fully answers |
| authorReputationScore | Author authority | E-E-A-T signals |
| chardVariance | Site consistency | Content governance |

The AEO Playbook Is Simpler Than It Seems:

- Create genuinely valuable content with original insights

- Structure it for extraction

- Maintain consistency across your site

- Build brand authority across platforms

- Make it technically accessible

This isn’t a call to abandon human-centered design. The most successful approach will balance both: content with emotional resonance for humans and factual verification for AI, customer journeys that account for AI research followed by human decision-making.