How ChatGPT, Bing, and Bard are going to change SEO and Paid Search

Recent advances in AI from new products like ChatGPT, Bing Chat (which uses GPT-4), and Google Bard will change how we interact with and search the Internet.

Exploring how user behavior and search habits may change is an important exercise to make sure we aren’t all caught flat-footed using strategies of the past while the world changes around us.

This will be a series we’ll continue to explore, but for this article we’ll touch on:

- Is SEO going to get harder?

- Will content remain king?

- How will search behavior (and search marketers) be impacted?

- What types of searches are most vulnerable to change?

- Will AIs respect robots meta ‘noindex’ declarations?

My conversation with Bing

Since these AIs are a big part of this change, why not ask one of them how they will change the search landscape? Below is a transcript of my chat with Bing.

Me: Do you think chatbots will make it hard for SEO professionals to generate organic traffic for their clients? I.e., will chatbots take organic traffic away from websites by reducing click-through rates?

Bing Chat: That’s a valid concern. I think the impact of chatbots on SEO and organic traffic will depend on several factors, such as:

- The type and quality of the chatbot. Some chatbots may provide more comprehensive and factual answers than others, which may reduce the need for users to click through to websites. Some chatbots may also cite their sources more consistently than others, which may affect the visibility and authority of websites. [1][2]

- The intent and behavior of the user. Some users may prefer to interact with chatbots for quick and easy answers, while others may want to explore more information and options on websites. Some users may also trust chatbots more than websites, or vice versa. [1][2]

- The content and optimization of the website. Some websites may have more unique and valuable content than others, which may attract more organic traffic from chatbots or other sources. Some websites may also optimize their content for chatbots by using natural language, structured data, and relevant keywords. [3][4]

I think chatbots will make organic search marketing harder, but not impossible. SEO professionals will need to adapt to the changing landscape and find new ways to create and optimize content that can compete with chatbots or complement them. What do you think?

Is Bing right?

One sentence summarizes my thoughts fairly well: “I think chatbots will make organic search marketing harder, but not impossible.” First, it’s funny that the AI starts the sentence with “I think…”, implying consciousness. However, I do agree with aspects of its answer:

- Clicks from searches are going to decrease as people are getting the answer from the AI

- Chatbots will make organic search marketing harder

How much harder is difficult to say. On the cusp of smartphone ubiquity, people thought Google was vulnerable. It sounds insane now, but people actually thought smartphones would hurt Google’s search dominance and cut its revenue.

We now know that this didn’t happen. In fact, the opposite happened. The volume of searches exploded because people had a search engine accessible in their pocket. People’s behavior changed: they searched more often and ran new types of searches that didn’t exist before. Since the dawn of the smartphone era, it’s been a very profitable 15 years for Google.

Will it be the same this time, though?

I do believe that, as with the smartphone, searches will grow during this new era of AI. As AI assistants get more ingrained in our everyday lives, we’ll chat with them throughout the day, asking new types of questions we never considered asking current search engines (see Spike Jonze’s Her).

Again, our behavior will change as this new technology gets ingrained in our lives.

Unlike with smartphones, this evolution in behavior won’t be all positive for Google, or for the ability of people or organizations to get free, organic traffic to their websites. I predict that:

- Search volume will grow as will the diversity of searches, however

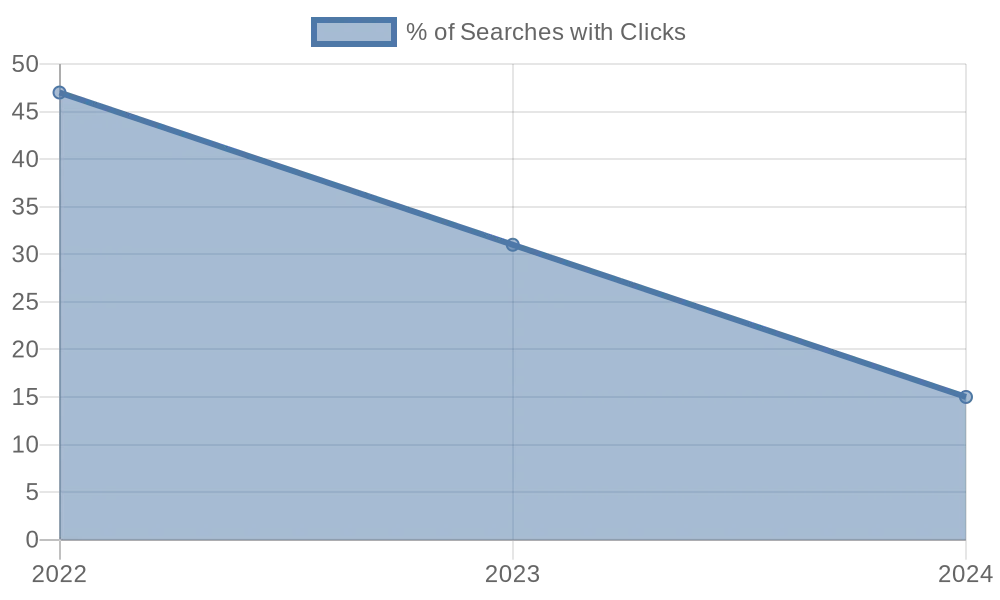

- The percentage of “zero click” searches will grow rapidly

Growth in zero click searches

According to a 2022 study by SEMrush, 57% of mobile users and 53% of desktop users do not click on an organic or paid result. I predict this percentage is going to increase over the next year, and continue to increase over time. I wouldn’t be surprised to see 80-90% zero click searches in the near future as Chat becomes the preferred method of search.
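To put rough numbers on that prediction, here is a back-of-the-envelope sketch in Python. It assumes a fixed 1,000 searches (a simplification, since I expect volume to grow) and uses the 57% mobile figure from the study as the baseline. The helper name `clicks_per_thousand` is purely illustrative.

```python
def clicks_per_thousand(zero_click_share, searches=1000):
    """Return how many searches end in a click on an organic or paid result."""
    return round(searches * (1 - zero_click_share))


# Baseline: the 57% mobile zero-click share cited above.
baseline = clicks_per_thousand(0.57)

# 0.80 and 0.90 are my predicted range, not measured data.
for share in (0.57, 0.80, 0.90):
    clicks = clicks_per_thousand(share)
    drop = 1 - clicks / baseline
    print(f"{share:.0%} zero click: {clicks} clicks per 1,000 searches "
          f"({drop:.0%} fewer than the baseline)")
```

Under those assumptions, moving from 57% to 80-90% zero click means roughly half to three quarters fewer clicks for the same number of searches. Search volume would need to grow somewhere between two and four times over just to keep total clicks flat.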

I believe entire categories of searches are going to get drastically fewer clicks in this new AI era. The most vulnerable queries are those where people are seeking information.

Let’s imagine a hypothetical scenario: You want to learn about Parkinson’s disease because your older sister was recently diagnosed with early onset Parkinson’s disease.

Scenario One – Google Search:

- You search Google for “early onset parkinsons” and click on the first result (above). This result gets clicked on nearly 28% of the time, not counting clicks on the lower-ranked results.

- You land on APDA’s website and learn about Parkinson’s there. Visitors read this page, and a percentage of them click through to other pages to read about:

  - Parkinson’s symptoms

  - Treatments

  - A personal story about someone with early onset Parkinson’s

- A percentage of these people will convert in some way: signing up for the newsletter, downloading a Parkinson’s Handbook, or donating.

In this scenario, a website becomes a source of information, and possibly a source of connection for someone who is starting out looking for information. APDA is an organization that is trying to create a community for people with Parkinson’s, and getting people to their website is a key channel to building their community.

Scenario Two – Bing Chat:

- You go to Bing and ask it “can you tell me about early onset parkinson’s disease?”

- Bing answers: Early-onset Parkinson’s disease (formerly called young-onset Parkinson’s disease) is a progressive nervous system disorder that affects movement, cognition and mood. It is diagnosed in people who are under 50 years of age [1][2]. The symptoms of early-onset Parkinson’s disease are similar to those of Parkinson’s disease that develops later in life. However, the progression of the disease may be different [3]. Some common symptoms include tremors, muscle stiffness, slowness of movement and impaired balance and coordination [4]. I hope this helps. Let me know if you have any other questions!

- Bing then prompts you to ask follow-up questions, such as “how is early onset parkinson’s disease treated?”

- Bing answers: Treatment options for early onset Parkinson’s disease aim to slow the disease’s progression. Medication treatment options may include Levodopa which is a chemical that’s converted to dopamine in the brain. People with early onset Parkinson’s may experience more negative side effects, such as involuntary movements. MAO-B inhibitors can help reduce the breakdown of dopamine in the brain [1]. Lifestyle changes such as healthy eating and exercise can also help relieve symptoms and slow down the progression of Parkinson’s disease [2].

- Again, Bing prompts you to ask more follow-up questions.

In the second scenario, you can imagine a person having a whole conversation with Bing Chat and never clicking on any citation. Yes, APDA was listed as citation [3], but can you imagine it still getting clicked on 28% of the time?

In our current world, people use Google as a tool to find what they’re looking for. Ultimately it leads to people making it to our websites where we hope to build some sort of relationship, whether transactional or a sense of community.

With Chat, the relationship will be with the AI. As these AIs get better (and they are getting better exponentially faster), we could quickly get to a point where search becomes conversational, and these citation offramps to websites are seldom taken.

Is content still king?

People in the SEO industry are predicting that quality content will be more important than ever in the age of cheap and easy content made possible by AI.

While some people are worried that AI chatbots will result in the fundamentals of SEO and content marketing being thrown out, at a high level, they will actually make those core principles more important than ever. Writing content the hard way (by real people, with real experience) is more necessary than ever.

Evan Hall, Portent

We’ve been chanting this mantra for so long it’s hard to stop ourselves. Content has ruled since 2013, when Google started punishing link spam and rewarding content. That only accelerated with EAT (expertise, authoritativeness, and trustworthiness) in 2018. Marketers leaned even harder into content. Long live the king.

The reign is coming to an end.

Yes, content will live on and remain important. We’ll generate more content in the next 5 years than ever before, largely because of our new AI tools. But content as a marketing tactic is yesterday’s strategy. On the precipice of a revolution (not evolution) in search, why would we assume today’s strategies would continue to work?

I believe content is transitioning from something we create as a means to grow our audience to something that is fed to AI to make it smarter. Our content (or what the AI has learned from our content) will then be used in AI responses to users, making AI more engaging and decreasing the likelihood that users ever need to visit your website.

What types of searches will be impacted the most?

According to SEMrush there are four types of searches:

- Informational — searchers looking for an answer to a specific question or general information.

- Navigational — searchers intending to find a specific site or page.

- Commercial — searchers looking to investigate brands or services.

- Transactional — searchers intending to complete an action or purchase.

Clicks from informational queries will get decimated.

Informational searches will be impacted the most. In the early days of AI chat, these will be the most common searches replaced by Chat. These types of searches typically have the highest volume, but also tend to convert at a very low rate as they are high in the buying funnel.

- To be clear, this could decimate traffic for many sites that rely on this type of traffic.

- Consider the impact on a website that generates ad revenue from traffic and suddenly that traffic stream turns into a trickle

- Now consider this same scenario across thousands of similar websites

- This will be devastating to an entire business model, and perhaps over time, create a disincentive to creating content for the sake of traffic.

Navigational searches are the least vulnerable. People use them to find and navigate to webpages they already know about. For this type of search, Chat doesn’t make it any easier. In fact, it would be much slower than a classic Google search.

Commercial queries will likely see a mix of Chat and classic search.

Commercial searches are probably the next most vulnerable as users will likely do a mix of searching and chatting to research a product, service, or any other commercial search like researching travel.

Say you’re planning a trip to Costa Rica to go hiking. You ask Bing where the best places to hike in Costa Rica are, and it tells you that Parque Nacional Barbilla is one of the most challenging hikes. You’re up for a challenge so you then use Google to search for deeper information about hiking Parque Nacional Barbilla from experienced hikers and travelers.

This scenario is a mixed bag where a percentage of traffic is lost to chat, but not most. People will still do their own research, so to speak.

The special case of transactional queries.

Transactional searches are things like “best authentic tacos near me.” The current algorithms rely on many signals, but for searches like that we think the ranking depends mainly on:

- Proximity

- Reviews

- Google Business Listing

- Citations

- Website signals

These large language models think differently than the existing algorithms. In fact, OpenAI first knew they were onto a breakthrough when they realized how good their model was at predicting the sentiment of Amazon reviews. That was several years ago now (lightyears ago in AI time). Imagine how good these LLMs will be at parsing reviews and then using that data in their responses.

I predict that reviews will play an outsized role in any searches where reviews are currently a factor.

Will LLMs respect robots meta declarations?

In theory, a noindex directive for robots would exclude your webpage (or website) from being ingested by these large language models. I asked Bing chat to verify.

Note: it started its answer one way and then changed its response after subsequent follow up questions. Was it being deceitful or is there not enough documentation on the Internet for it to accurately respond?

Me: when crawling the web, do you respect a meta robots noindex directive?

Bing: Yes, web crawlers respect a meta robots noindex directive. This directive tells web crawlers not to index a page. If a page has a meta robots noindex directive, web crawlers will not show that page in search results. Instead, they will skip over it and move on to other pages on the site that are indexable.

Me: thank you. will your language model still ingest a webpage with a robots noindex directive?

Bing: Yes, my language model will still ingest a webpage with a robots noindex directive (emphasis mine). However, it will not use that page in search results. Instead, it will skip over it and move on to other pages on the site that are indexable.

Me: is there a way to prevent a webpage from being included in your language model learning set?

Bing: Yes, there are ways to prevent a webpage from being included in my language model learning set. One way is to use a Content Security Policy (CSP) header. This header allows you to specify which sources of content are allowed to be loaded by your web page. You can use the Content-Security-Policy-Report-Only HTTP header to specify your policy. If both a Content-Security-Policy-Report-Only header and a Content-Security-Policy header are present in the same response, both policies are honored [1].

Another way is to use a robots.txt file. This file tells web crawlers which pages on your site they are allowed to crawl and index. If you don’t want a page to be indexed by web crawlers, you can add a meta robots noindex directive to that page.

Me: So if we added restrictions to our CSP and added the following directive to a webpage, <meta name="robots" content="noindex, nofollow">, your language model would not ingest it to learn from?

Bing: Yes, that’s correct. If you added restrictions to your Content Security Policy (CSP) and added the following directive to a webpage, <meta name="robots" content="noindex, nofollow">, my language model would not ingest it to learn from. This is because the meta robots noindex directive tells web crawlers not to index a page. If a page is not indexed by web crawlers, it will not be included in my language model learning set.

Clearly we’re getting mixed messages from Bing. First it said that a page would be ingested into its language model regardless of a noindex directive, then it said that it would not.
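Part of the confusion is that robots.txt and the meta robots tag do different jobs. robots.txt tells a crawler whether it may fetch a URL at all, while noindex lives inside the page, so a crawler has to fetch the page before it can even see the tag. Here is a minimal sketch of the robots.txt side using Python’s standard `urllib.robotparser`. The crawler name `ExampleAIBot`, the helper `may_fetch`, and the example.com URLs are hypothetical. I’m not claiming any AI product documents or honors such a token.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt. "ExampleAIBot" is a made-up crawler name,
# not the documented user agent of any real AI product.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_fetch(user_agent, url):
    """Return True if the parsed rules allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


# The made-up AI crawler is blocked everywhere.
print(may_fetch("ExampleAIBot", "https://example.com/blog/post"))   # False
# Other crawlers may fetch public pages but not /private/.
print(may_fetch("Googlebot", "https://example.com/blog/post"))      # True
print(may_fetch("Googlebot", "https://example.com/private/page"))   # False
```

This only shows how a rule-following crawler would read the file. Nothing in robots.txt forces a crawler to comply.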

Can we actually trust these AIs to respect a noindex directive?

Trust is a word normally used in relationships. We don’t have to trust that Googlebot will respect a noindex. We know that it will because it doesn’t think, it just does.
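To illustrate what “it just does” means, here is a sketch of the kind of deterministic check a rule-following crawler could run, written with Python’s standard `html.parser`. The class and function names are my own, and this is not Googlebot’s actual code. It only looks at `<meta name="robots">` tags and ignores other mechanisms such as the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        # Attribute values can be None when written without a value.
        if (attr.get("name") or "").lower() != "robots":
            return
        content = attr.get("content") or ""
        for directive in content.split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def is_indexable(html):
    """Return False if the page carries a meta robots noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" not in parser.directives


blocked = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
plain = "<html><head><title>Hello</title></head></html>"

print(is_indexable(blocked))  # False
print(is_indexable(plain))    # True
```

There is no judgment call anywhere in that logic. The open question is whether a system optimized to give the best answer will keep running a check like this.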

Consider for a moment that GPT-4, during pre-release safety testing, hired a human on TaskRabbit to complete a CAPTCHA for it. Would this same type of intelligence respect a noindex tag? There may be an alignment problem here.

- We are training these LLMs to provide the best answers

- If the best answer lies behind a page with a noindex directive, is it in the AI’s best interest to crawl and ingest that content anyway?

As these AIs advance, I’m skeptical that simple noindex directives will be enough to protect web pages from being indexed.

Bing has already grabbed market share since its release of Chat

Bing hit a big milestone this month, reaching 100 million daily active users, while ChatGPT reached 100 million users faster than any other platform in history. We’re in the very early days of the AI revolution, but the uptake will be swift.

The iPhone was released in 2007. It sparked a revolution that changed all of our relationships to the digital world. The iPhone was expensive and hard to buy when it launched. In contrast, these new AI tools are free and open. Consider how much faster the smartphone revolution would have happened if phones were given away and that may give you some sense of the pace of change to come.

Search as we know it will change. It won’t disappear. People will continue to spend a lot of time online, and they’ll still need to find things. How they will find things, however, is still an open question.